The title might seem a bit unusual. But let me take you back to the beginning and share how my work on a BIO299 research project led me to reflect on the role of AI in the sciences.

Right now, my supervisor and I are gathering data on the organic carbon content of certain soil samples. These soil samples were collected all over Norway from locations with varying properties.

But what do you need this data for?

Good question! The thing is, there is a large gap of knowledge about the lifestyle of so-called Ericoid mycorrhizae…

The what in the whatnot?

Stay with me: A lot of plants, here specifically the plants of the family Ericaceae (e.g., blueberry or rhododendron), live in symbiosis with fungi that help them absorb nitrogen and provide certain immunities and/or other beneficial “services” to the host plant. In return they receive sugars which the host plant produces through photosynthesis. These Ericoid mycorrhizae are microscopic and live in the hair roots of the plant. It is unlikely that you have ever had any interaction with them directly, as they exist entirely out of sight, functioning quietly beneath the soil surface in symbiosis with their plant hosts.

Ericoid mycorrhizae structures inside Vaccinium myrtillus hair root

To understand them, their host plants, and changes in their biodiversity – especially in connection with climate change – as much relevant data as possible must be collected.

Soil organic carbon has been shown to influence, for example, fungal community composition (Lin et al., 2023). Thus, I am sitting in the carbon laboratory and processing soil samples to get an estimate of the organic carbon content of each sample.

But what does that have to do with AI?

Lab work lets your thoughts flow quite freely, especially if nobody else is around. That allowed me to recapitulate conversations I had with professors and other students, as well as the information I have ingested lately about AIs, LLMs etc.

So, in the following I will tell you a bit about what I did in this project and how you estimate soil organic carbon (or soil organic matter). Along the way, I will share what I think about the discussion that students, instructors and professors are currently having about AI in the sciences.

The Idea

Preceding all my work was of course a collection of soil samples, which are now stored in the walk-in freezer. That was done over a period of four days by my supervisor. Before that came hatching the idea that organic matter content might be a variable to consider. While retrieving samples from the freezer, I began to wonder: Could an AI have suggested this idea? What would that mean for research integrity?

Just consider this alternative scenario: You are searching online for papers on the topic you are writing about to narrow down your research question even more, e.g., for a grant proposal, or because your supervisor sent you a one-liner email containing only “narrow it down”. You are well versed in the topic, but you are not (yet!) the leading authority in the area. So, searching online for publications is essential. You want to do original work, so you need to check whether somebody has already answered the question you pose.

Let me tell you a secret: Search engine algorithms that show you a ranking of your search results are trained on your search behaviour. Essentially, you are already using AI. Maybe not knowingly or intentionally – but still, if you are strict about it, AI aided your work. That the search engine didn’t answer in a language natural to you, but rather gave you a list of results, doesn’t matter. It is trained on you (or rather a population that shares characteristics with you) to give you exactly the results you are searching for. So, without even being aware of the fact, you use AI technology when you query Google (or whatever search engine you prefer to use). But hang on, using a search engine to find information – surely that’s not cheating?

Now you have your search results. You still need to curate them and decide what to read and what to ignore. But to be honest, it is just the same process all over again: You have a bunch of data (before it was all search engine-indexed scientific publications, now your search results), and you must filter them by relevance (the same thing that the search algorithm did before, albeit on a smaller scale). Maybe add a little note to your selection that sums up the articles you intend to read. I am pretty sure that if you tell somebody that you had this handy little (non-AI) tool that does that for you, nobody would complain.

A lot of people with (and without) authority will tell you that you shouldn’t let an AI (LLM) do that work for you. Also, instructors I spoke with caution against that approach. The main argument is often the same: “You’re not going to learn if an AI does the work for you!” I disagree: You still have to ask the right question to get an acceptable result. And the only way to pose the right question is to understand the subject matter. The computer will do your grunt work (filtering and summing up articles), but it won’t understand the subject for you. If you don’t understand the subject, you cannot ask the right questions. I think that is a theme that transcends the sciences.

The Experiment

And now let’s get back to work! After some light fighting with the labelling logic and some chilly time spent in the freezer, finally all the right samples emerged and are ready to be processed. We want to find out how much soil organic matter is present in the samples.

How can we do that?

Let’s burn the samples! Well, almost. The trick is that some clever people (Howard and Howard, 1990) found out that organic matter contains around 58% carbon. So, if we first weigh our sample, then burn away all the organic matter, and then weigh the sample a second time, the difference in mass tells us how much organic matter was in the sample. The organic carbon is then roughly 58% of that lost mass.

So, in order to burn the samples, or rather heat them to 550°C so that the carbon escapes as CO2, we first need to dry them and sort out all matter that might skew our weighing results.
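For readers who like to see the arithmetic, here is a minimal sketch of the weigh, burn, weigh calculation. It is not the exact script used in this project. It assumes the common loss-on-ignition convention that the mass lost at 550°C approximates the organic matter, and that organic matter is about 58% carbon, as cited above. The function name, the field names and the example masses are made up for illustration, and both masses are assumed to be net of the crucible.

```python
from dataclasses import dataclass

# Assumption: organic matter is roughly 58% carbon (the figure cited in the text).
CARBON_FRACTION_OF_ORGANIC_MATTER = 0.58


@dataclass
class LoiResult:
    organic_matter_g: float    # mass lost during ignition, in grams
    organic_matter_pct: float  # mass lost as a percentage of the dry sample
    organic_carbon_pct: float  # estimated organic carbon, percent of the dry sample


def loss_on_ignition(dry_mass_g: float, ash_mass_g: float,
                     carbon_fraction: float = CARBON_FRACTION_OF_ORGANIC_MATTER) -> LoiResult:
    """Estimate organic matter and organic carbon from two weighings.

    dry_mass_g: sample mass after drying, before ignition (crucible subtracted).
    ash_mass_g: sample mass after ignition (crucible subtracted).
    """
    if dry_mass_g <= 0:
        raise ValueError("dry mass must be positive")
    if not 0 <= ash_mass_g <= dry_mass_g:
        raise ValueError("ash mass must lie between 0 and the dry mass")

    lost_g = dry_mass_g - ash_mass_g            # what burned away
    organic_matter_pct = 100 * lost_g / dry_mass_g
    organic_carbon_pct = organic_matter_pct * carbon_fraction
    return LoiResult(lost_g, organic_matter_pct, organic_carbon_pct)


if __name__ == "__main__":
    # Hypothetical sample: 10 g dry soil, 8 g left after ignition.
    result = loss_on_ignition(dry_mass_g=10.0, ash_mass_g=8.0)
    print(f"Organic matter: {result.organic_matter_pct:.1f} %")
    print(f"Organic carbon: {result.organic_carbon_pct:.1f} %")
```

The carbon fraction is kept as a parameter because the 58% figure is an average, and a different value may suit particular soil types or horizons better.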

Soil before and after sorting

This is by far the most time-consuming part of the whole experiment. Now, here is another thing an AI cannot do: It cannot get a “feel” for the subject matter you work with in your research. Let me explain …

If you are working with something, in my case soil samples, you learn how it smells, handles, looks and “behaves”. This might be a slightly extreme example, because I mostly used my bare hands to manipulate the material. The samples all had a different feel, smell and often colour.

Soil samples before “burning” them

But I’ll venture that this also holds true for samples you are only remotely interacting with, like in an Eppendorf tube or a petri dish. From my former background in the computer sciences, I can tell you that this is even true for immaterial things, like algorithms or mathematical proofs in a (very) abstract way. You associate certain attributes with your subject matter or their constituents. These attributes or qualities are unique to them, and they help you to understand your subject matter.

Now you might say:

Listen, all these properties you listed are somehow quantifiable. Smell is just some chemicals, looks is just reflected light, and feels is just texture and weight! Purely quantifiable! So let’s just train an AI and let it go nuts with this stuff!

And I can only agree with you! But there are some catches – even ignoring the fact that an AI-supported machine executing the experiment would be a significant engineering and sensory challenge. I am only looking at the “information theoretical” (I am using the term very loosely) part of the process.

The first catch is that not all this knowledge is written down or explicitly communicated. I might even go so far as to say that it is partly incommunicable in written language. Therefore, it is not available as training data to any AI. The second one, and by far the most important one, is that research is by definition “creative and systematic work undertaken to increase the stock of knowledge” (OECD, 2015). I won’t go into what constitutes creativity; that would be opening a whole other can of worms. But the “knowledge” part is interesting. Far from being an epistemologist myself, my take on the matter is that the pursuit of knowledge is not a purely objective process.

Whaaaat?

Don’t run away quite yet. I am not crazy, though this might sound esoteric. Scientific facts are still objective! But knowledge, or better yet, the way we increase our knowledge, is at least partly a subjective process.

For the sake of simplicity, let’s consider an expert carpenter with decades of experience. Even if you have read all the literature and know all the physics behind woodworking, I am pretty sure you will agree that he knows and practises carpentry better than you do, even with only a fraction of your literary background. He probably has a fingerspitzengefühl that you don’t have, and which I doubt an AI in its current implementation can ever acquire. And the carpenter might teach you a thing or two you didn’t know, i.e., there will be a transfer of knowledge.

So, deducing facts purely logically, mixed with the right amount of randomness, like an AI does, can give interesting and even surprising results. But as far as human knowledge is concerned, you need a human to understand and communicate it, though he or she might be aided by an AI as a tool.

I could write a whole lot more about this, since I had ample time to reflect on the topic while preparing the soil samples, but for the sake of brevity I will leave it at that. I recommend that everybody who is interested in this topic read up on epistemology, especially discussions about “rational intuition”.

And, as we say… the rest is left as an exercise to the reader.

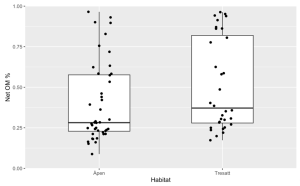

Statistics and Write-up

Let us come back to reality from all this philosophical musing. There is still work to do! After the ignition (burning) of the samples and the second weigh-in, I ended up with an Excel sheet with 76 data points. The statistics on this data didn’t yield any surprising results, but none were expected either. Does that mean there is no result? Well, certainly nothing you could publish. But my work is only part of a much bigger project. The hope is that the data I delivered can in the future be put into a bigger context, which might reveal new and interesting facts about ericoid mycorrhizae.

A graph from the statistics of the project

Writing down the results as I do here, or rather formulating them for a potential reader, brings me to my biggest issue with the view quite a lot of people in academia have regarding AI: that using AI to help you write your paper, thesis or report somehow degrades its content or makes it an inferior product. I agree that there is an art to academic writing. But do we all need to strive to master this art to perfection? Wouldn’t it be more sensible to focus on the actual research? I know that I have struggled enough to put my thoughts into words, and I am very thankful that I now have access to a tool that can give me context-sensitive suggestions on how to improve my formulations. In my opinion, not everybody can be an expert communicator, nor is it desirable to strive to become one if you don’t have a natural talent and/or motivation for it.

The argument that if I let an AI write out my thoughts, then my thoughts on the matter are not clear is also moot. You still have to feed the AI with the right prompts (or questions, as discussed above) to give you an acceptable output. If you have ever tried that, you will know how hard it can be to force an AI to give you an output that fulfils your expectations.

Of course, the idea of using AI in academic writing sparks strong opinions, with some arguing that it undermines originality and integrity. But it was almost the same with the internet! Through no fault of my own, I am roughly twice the average age of my fellow students. When I went to school and the internet became widely available (insert modem sounds here), we were strongly cautioned against using it for any assignments. You were supposed to use encyclopaedias, because everything else was not reliable information and could be seen as cheating. Well, you know how that turned out … And cheaters will be cheaters, with or without AI. In any case, there is no accurate or reliable way to distinguish a text written by an AI from one written by a human (Weber-Wulff et al., 2023), and time will tell how that influences the sciences.

Conclusion

I wrote a lot, and don’t want to steal any more time from you. What I really wanted to say are probably these two things:

- Good research doesn’t become tainted just because of a certain tool used to create the result or to communicate it, if the tool used was applied with appropriate expertise, clear awareness of its constraints, and adherence to scientific rigor.

- If you really want to get your hands dirty and become familiar with your research subjects, take BIO299. You never know what you will discover or learn by actively working with your samples and reflecting on what you are doing.

And if you want to feel really good about using AI to write and/or illustrate, the carbon footprint of the AI is apparently lower than yours (Tomlinson et al., 2024).

References

Howard, P.J.A. and Howard, D.M. (1990) ‘Use of organic carbon and loss-on-ignition to estimate soil organic matter in different soil types and horizons’, Biology and Fertility of Soils, 9(4), pp. 306–310. Available at: https://doi.org/10.1007/BF00634106.

Lin, J.S. et al. (2023) ‘Soil organic carbon, aggregation and fungi community after 44 years of no-till and cropping systems in the Central Great Plains, USA’, Archives of Microbiology, 205(3), p. 84. Available at: https://doi.org/10.1007/s00203-023-03421-2.

OECD (2015) Frascati Manual 2015: Guidelines for Collecting and Reporting Data on Research and Experimental Development. Paris: Organisation for Economic Co-operation and Development. Available at: https://www.oecd-ilibrary.org/science-and-technology/frascati-manual-2015_9789264239012-en (Accessed: 27 November 2024).

Tomlinson, B. et al. (2024) ‘The carbon emissions of writing and illustrating are lower for AI than for humans’, Scientific Reports, 14(1), p. 3732. Available at: https://doi.org/10.1038/s41598-024-54271-x.

Weber-Wulff, D. et al. (2023) ‘Testing of detection tools for AI-generated text’, International Journal for Educational Integrity, 19(1), pp. 1–39. Available at: https://doi.org/10.1007/s40979-023-00146-z.